Hi All,

My data has 2 integer attributes; all the others are polynominal (categorical) attributes:

id

state

year

month

leads (int)

responses (int)

typeOfMail

status

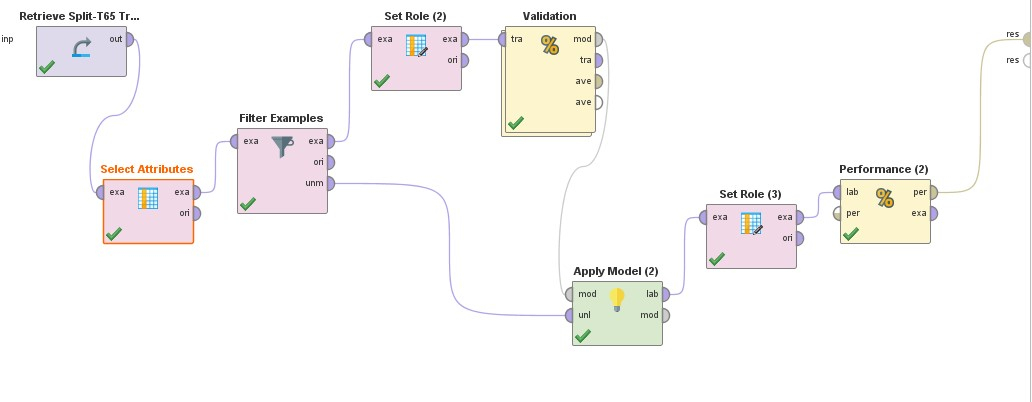

I used a split model, dividing my 22 months of data into 20 months and 2 months, and got an RMSE of 12.41 and

squared_error: 154.176 +/- 335.663.
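For reference, the two numbers above appear to describe the same error: RMSE is the square root of the mean squared error. A minimal Python check, assuming 154.176 is the mean squared error (the variable names are only for illustration):

```python
import math

# Values copied from the performance output above.
mean_squared_error = 154.176
reported_rmse = 12.41

# RMSE is defined as the square root of the mean squared error.
rmse = math.sqrt(mean_squared_error)

# The recomputed value should agree with the reported one up to rounding.
difference = abs(rmse - reported_rmse)
print(f"recomputed RMSE = {rmse:.4f}, difference from reported = {difference:.4f}")
```

So reducing one of these metrics reduces the other; they are not two separate problems.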

I don't know how to reduce this error, and I'm not sure whether I can apply any other models, because I believe my options are limited.

I have already tried other combinations of models, such as adding k-NN and a decision tree, but that didn't help.

I also tried splitting the data into 18 and 4 months (22 in total), which didn't help either.
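To make the two splits concrete, here is a rough Python sketch of holding out the last few months by date. It assumes `year` and `month` are stored as integers, which may not match the real data. The helper `split_by_month` and the toy rows are hypothetical, not taken from my actual process:

```python
def split_by_month(rows, n_test_months):
    """Hold out the most recent n_test_months (year, month) periods as the test set."""
    if n_test_months <= 0:
        raise ValueError("n_test_months must be positive")
    # Distinct periods in chronological order (assumes integer year and month).
    periods = sorted({(r["year"], r["month"]) for r in rows})
    test_periods = set(periods[-n_test_months:])
    train = [r for r in rows if (r["year"], r["month"]) not in test_periods]
    test = [r for r in rows if (r["year"], r["month"]) in test_periods]
    return train, test


# Hypothetical toy data: one row per month for 22 consecutive months.
rows = [
    {"year": 2020 + m // 12, "month": m % 12 + 1, "leads": 0, "responses": 0}
    for m in range(22)
]

train_20, test_2 = split_by_month(rows, 2)   # the 20 / 2 split
train_18, test_4 = split_by_month(rows, 4)   # the 18 / 4 split
print(len(train_20), len(test_2), len(train_18), len(test_4))
```

In both cases the test months come strictly after the training months, so the model is always evaluated on data later than anything it was trained on.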

What should I do?