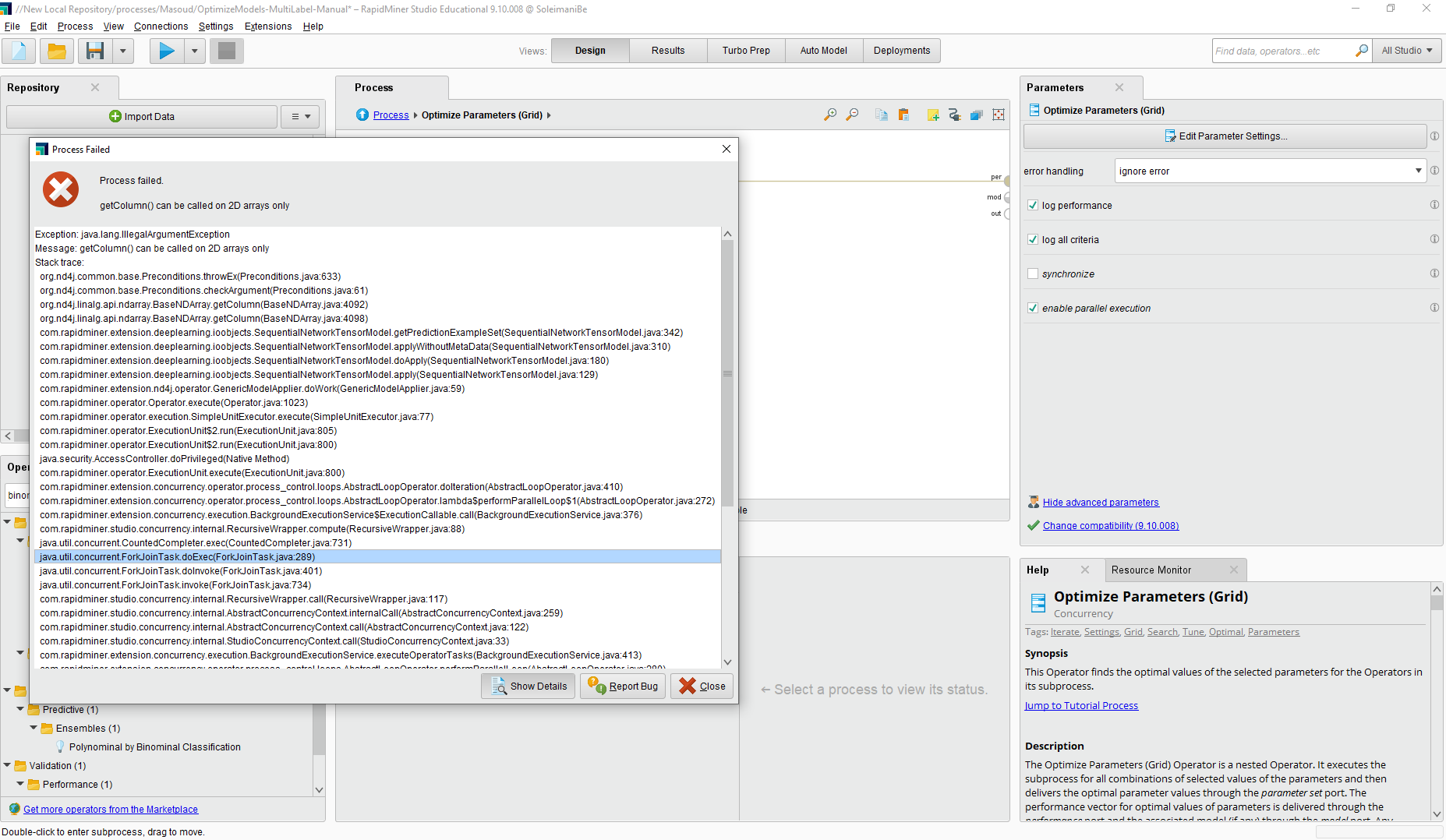

Unfortunately, the Deep Learning module does not handle its exceptions gracefully: a single error fails the entire process. As a result, I cannot use it inside Optimize operators such as Grid Search, because one configuration error brings down the whole process!

Besides, its error messages are not clear, and I can't understand how I should solve the problem. Usually there is no documentation or similar case to refer to, because deep learning layers can have so many different architectures and configurations.