I just trained a model using a Decision Tree that reached an F-score of 99.7%.

That sounds good until you hear that Naive Bayes only got 66.4%.

The highest score I found for that dataset was 98.2%, using deep learning.

The highest CREDIBLE score I found for that dataset was 78.5%.

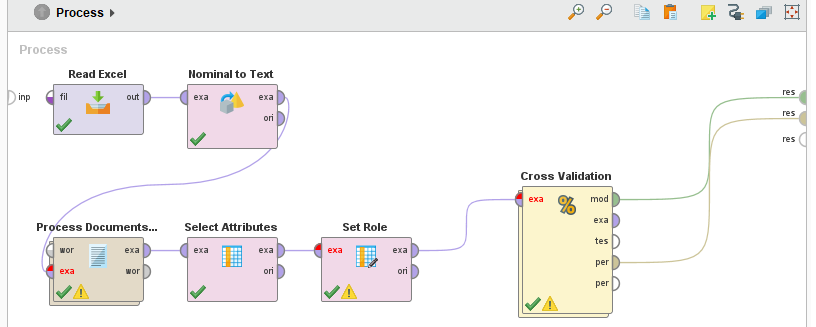

The design is based on this video:

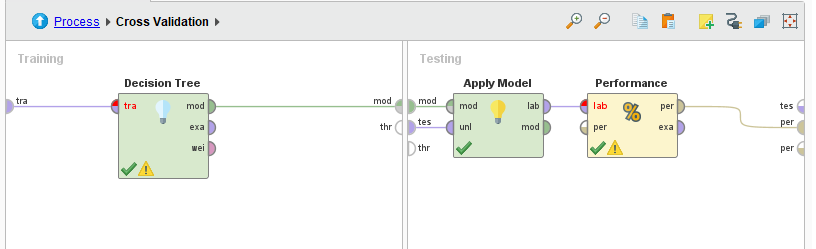

All I did was replace the Naive Bayes operator inside the cross-validation with the Decision Tree operator.

Even with 10-fold cross-validation, I would not expect to get much more than 70%...

The immediate cause of the high score is that, for some reason, there is a strong correlation between the label and the id, so the tree can simply split on the id. However, I do not know how to limit which columns the algorithm uses.
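To make the suspected effect concrete, here is a minimal sketch in plain Python (not the RapidMiner process itself). Everything in it is an assumption for illustration: the data is synthetic, the ids are handed out in label order, and `make_rows`, `fit_stump`, and `cross_val_accuracy` are made-up helper names. A one-split "tree" is cross-validated once with the id column available and once without it.

```python
import random

def make_rows(n=200, seed=0):
    """Synthetic, hypothetical data: ids follow label order, plus one weak noisy feature."""
    rng = random.Random(seed)
    rows = []
    for i in range(n):
        label = 1 if i >= n // 2 else 0          # first half class 0, second half class 1
        noisy = label + rng.gauss(0, 1.5)        # only weakly related to the label
        rows.append({"id": i, "feature": noisy, "label": label})
    return rows

def fit_stump(rows, columns):
    """Return the (column, threshold, label_above) split with the best training accuracy."""
    best = (-1.0, None, None, None)
    for col in columns:
        for t in sorted({r[col] for r in rows}):
            for above in (0, 1):
                correct = sum(
                    (above if r[col] >= t else 1 - above) == r["label"] for r in rows
                )
                acc = correct / len(rows)
                if acc > best[0]:
                    best = (acc, col, t, above)
    return best[1:]

def predict(stump, row):
    col, t, above = stump
    return above if row[col] >= t else 1 - above

def cross_val_accuracy(rows, columns, k=10, seed=1):
    """Plain k-fold cross-validation on shuffled rows, using only the given columns."""
    rows = rows[:]
    random.Random(seed).shuffle(rows)
    folds = [rows[i::k] for i in range(k)]
    correct = 0
    for i, test in enumerate(folds):
        train = [r for j, fold in enumerate(folds) if j != i for r in fold]
        stump = fit_stump(train, columns)
        correct += sum(predict(stump, r) == r["label"] for r in test)
    return correct / len(rows)

rows = make_rows()
with_id = cross_val_accuracy(rows, ["id", "feature"])
without_id = cross_val_accuracy(rows, ["feature"])
print(f"with id: {with_id:.3f}, without id: {without_id:.3f}")
```

In this toy setup cross-validation does not protect against the problem, because the id is just as predictive in the held-out fold as in the training folds. Once the column is excluded before the learner sees it, the score falls back to what the real feature can support.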

So the question is: what did I do wrong, and how do I make it right?