Hello,

Can anybody help me solve this problem?

In the first picture I have this setup:

Here I measure the performance on the same data the model was trained on, and the accuracy is 87.44%.

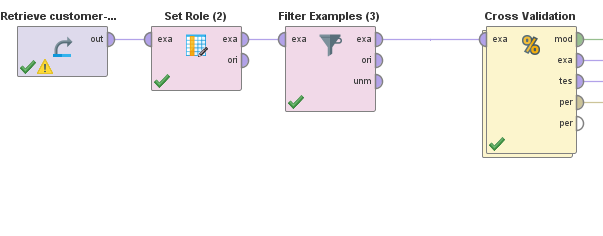

When I run the same procedure inside cross validation, like this:

(inside cross validation)

the accuracy I get is 82.11%.

It is the same procedure, just placed inside a Cross Validation operator.
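To make the cross validation setup concrete, here is a minimal sketch in plain Python (not RapidMiner) of how k-fold cross validation splits the rows: every example lands in a test fold exactly once and is never in the training part of that same round. The function name `k_fold_indices` is hypothetical, and the folds are contiguous and unshuffled for simplicity, whereas real tools usually shuffle or stratify.

```python
def k_fold_indices(n_examples, k):
    """Hypothetical helper: split indices 0..n_examples-1 into k contiguous
    folds (no shuffling) and return (train_indices, test_indices) per round."""
    # The first n_examples % k folds get one extra example.
    fold_sizes = [n_examples // k + (1 if i < n_examples % k else 0) for i in range(k)]
    folds, start = [], 0
    for size in fold_sizes:
        folds.append(list(range(start, start + size)))
        start += size
    splits = []
    for i, test in enumerate(folds):
        # Training set for round i = every fold except fold i.
        train = [idx for j, fold in enumerate(folds) if j != i for idx in fold]
        splits.append((train, test))
    return splits


for train, test in k_fold_indices(10, 3):
    print(len(train), test)
# 6 [0, 1, 2, 3]
# 7 [4, 5, 6]
# 7 [7, 8, 9]
```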

Why is there a difference between the two cases?

What I have understood is that in the second case the model is trained first and its performance is then measured on the testing subset, so that measurement is more accurate.

So more training doesn't always mean greater accuracy?
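A small experiment in plain Python can illustrate why the two numbers differ. It is a sketch under stated assumptions: the noisy 1-D dataset and the 1-nearest-neighbour classifier are hypothetical stand-ins (1-NN is chosen only because it memorises its training data), not the model or data from my process. Scoring a model on the data it was trained on gives an optimistic number, while cross validation scores each example with a model that never saw it.

```python
import random


def make_noisy_data(n, noise, seed):
    """Hypothetical 1-D data: label is 1 when x > 0.5, flipped with probability `noise`."""
    rng = random.Random(seed)
    data = []
    for _ in range(n):
        x = rng.random()
        y = 1 if x > 0.5 else 0
        if rng.random() < noise:
            y = 1 - y  # label noise the model cannot genuinely learn
        data.append((x, y))
    return data


def predict_1nn(train, x):
    """1-nearest-neighbour: return the label of the closest training point."""
    _, label = min(train, key=lambda p: abs(p[0] - x))
    return label


def accuracy(train, test):
    """Fraction of test examples whose 1-NN prediction matches the true label."""
    hits = sum(predict_1nn(train, x) == y for x, y in test)
    return hits / len(test)


def cross_val_accuracy(data, k):
    """Average test accuracy over k contiguous folds (data is already random)."""
    fold_size = len(data) // k
    scores = []
    for i in range(k):
        test = data[i * fold_size:(i + 1) * fold_size]
        train = data[:i * fold_size] + data[(i + 1) * fold_size:]
        scores.append(accuracy(train, test))
    return sum(scores) / k


data = make_noisy_data(200, noise=0.2, seed=0)
train_acc = accuracy(data, data)           # evaluated on the training data itself
cv_acc = cross_val_accuracy(data, k=10)    # each example scored by a model that never saw it
print(f"training-set accuracy: {train_acc:.2%}")
print(f"10-fold CV accuracy:   {cv_acc:.2%}")
```

The training-set accuracy comes out at 100% because every point is its own nearest neighbour, while the cross-validated accuracy is noticeably lower; the lower figure is the more honest estimate of how the model performs on unseen data.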

I hope my question is clear.

Thanks in advance.