Hello,

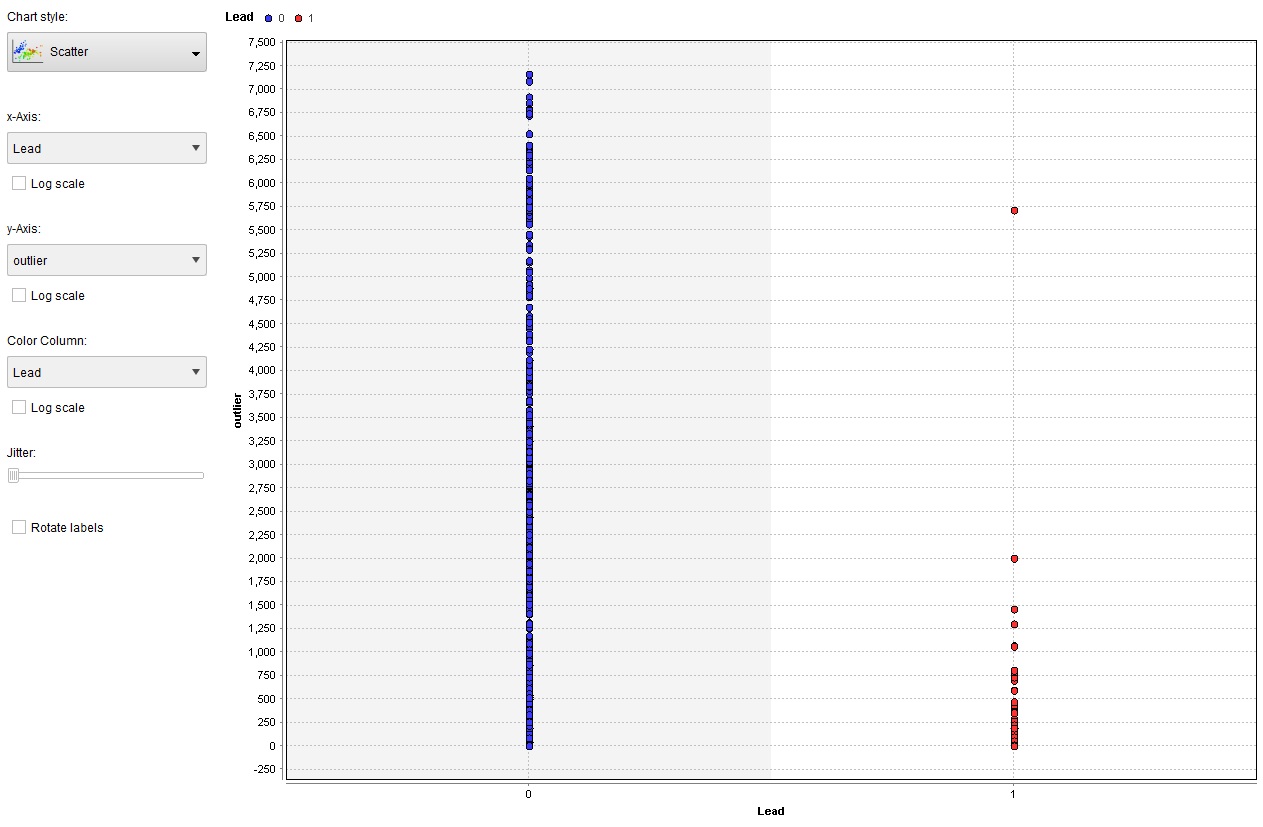

I am looking for confirmation on how I identified a set of reliable negative examples from unlabeled data. I have seen numerous methods for this, but nothing that matches the approach I took (which isn’t to say it isn’t out there; I just haven’t come across it in my research).

Some background: the dataset I’m working with has a set of positive examples (n = 964) and a larger set of unlabeled data (n = 8,107). I do not have a set of negative examples. I tried a one-class SVM but have not had any luck with it. So, I decided to try to identify a set of reliable negatives from the unlabeled data so that I could run a traditional regression model.

The approach I took was to run a local outlier factor (LOF) on all of my data to see whether I could isolate a set of examples whose outlier scores are clearly separated from those of my positive examples. The chart below shows the distribution of scores for my positive class (lead = 1) and my unlabeled data (lead = 0). As can be seen, the positive examples (apart from the one example with an outlier score near 5,750) are all clustered at or below an outlier score of about 2,000.

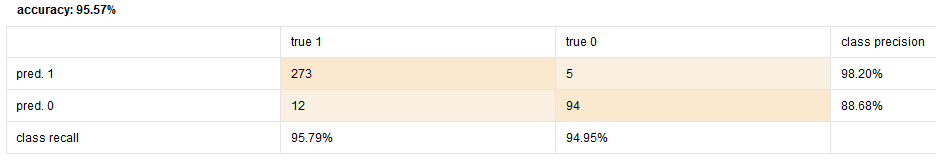

From here, I selected all of the examples from this output where lead = 0 (to ensure I’m only grabbing unlabeled data) and outlier score ≥ 2,001 (the highest lead outlier score is 2,000.8) to use as my set of reliable negative examples (n = 315). I then created a dataset of all of my positive examples plus the set of reliable negatives (n = 1,279) and fed this into a logistic regression model. I am getting very strong performance on both the training and testing sets, which is making me question my method. Here is the training performance table:

- Training AUC: 0.968 +/- 0.019 (mikro: 0.968)

Here is the testing performance table:

My questions are:

- Does the logic behind how I identified a set of reliable negatives make sense? Are there any obvious (or not so obvious) flaws in my thinking?

- By constructing my final dataset in this way, am I making it too easy for the model to correctly classify examples?
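
In case it helps anyone answering, here is a minimal Python sketch of the selection step described above. It is not my actual workflow: the function name `select_reliable_negatives`, the variable names, and the toy scores are made up for illustration, and it assumes the outlier scores have already been computed for every row. The only values taken from my data are the 2,001 cutoff and the lead = 0 / lead = 1 coding.

```python
from typing import List


def select_reliable_negatives(scores: List[float],
                              lead: List[int],
                              threshold: float = 2001.0) -> List[int]:
    """Return row indices of unlabeled rows (lead == 0) whose outlier
    score is at or above the threshold."""
    return [
        i for i, (score, label) in enumerate(zip(scores, lead))
        if label == 0 and score >= threshold
    ]


# Hypothetical toy data: three positives followed by four unlabeled rows.
scores = [1200.0, 1850.5, 2000.8, 900.0, 2500.0, 1999.0, 4100.2]
lead = [1, 1, 1, 0, 0, 0, 0]

# Unlabeled rows scoring at or above the cutoff become "reliable negatives".
negatives = select_reliable_negatives(scores, lead)

# Final modelling set: every positive row plus the reliable negatives.
positives = [i for i, label in enumerate(lead) if label == 1]
training_rows = positives + negatives

print(negatives)      # indices of the selected unlabeled rows
print(training_rows)  # indices of all rows passed to the classifier
```

Note that with this rule the negatives are chosen purely by their outlier score, so the two classes in the final dataset are separated by construction along that score, which is part of what my second question is getting at.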

Thank you for any help and guidance.

Kelly M.