Hi all,

I have a classification problem for which I use logistic regression.

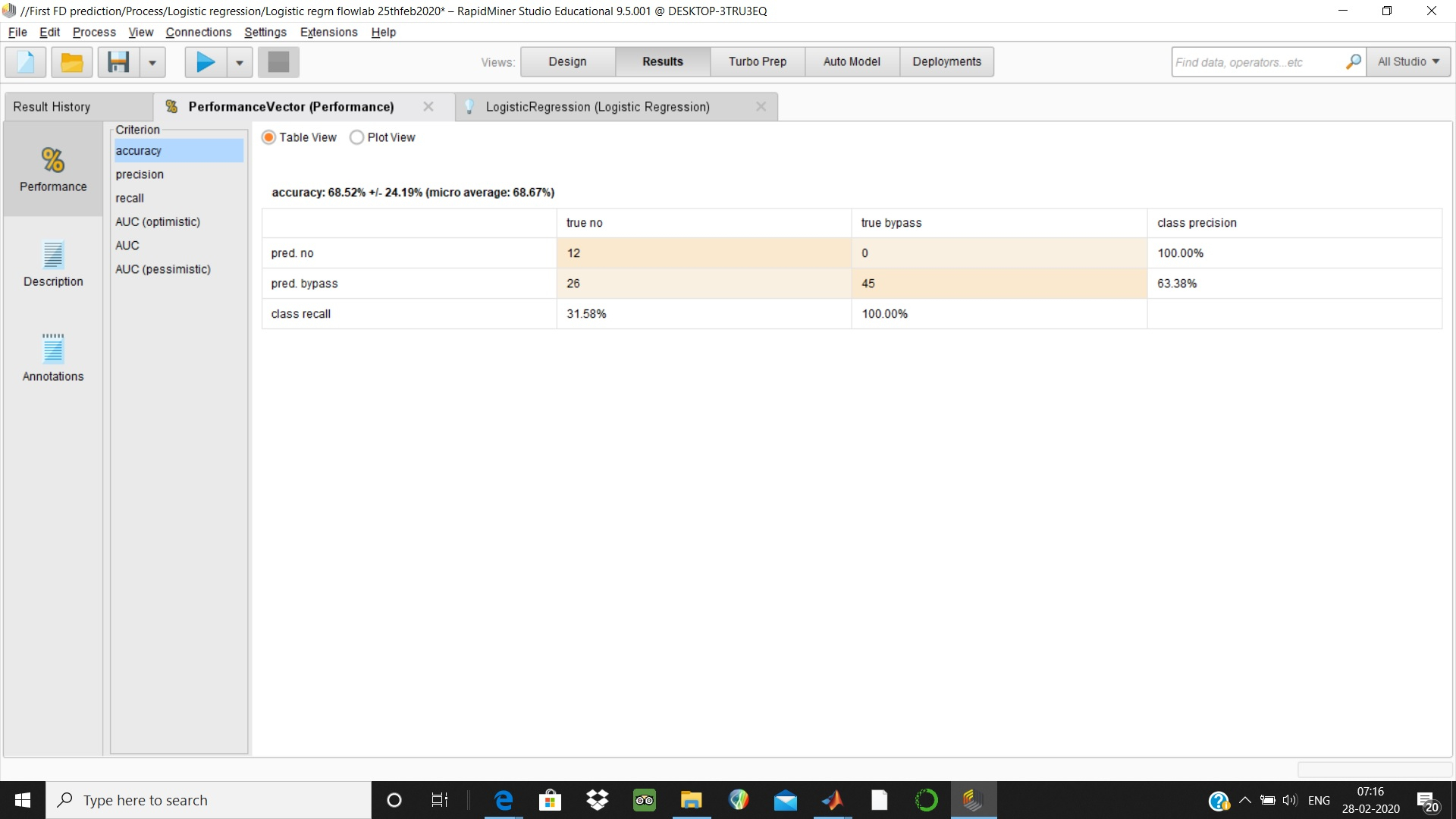

Initially, I get an accuracy of 68.52% with 100% recall.

Subsequently, with regularisation, I get an accuracy of 98.77% with 100% recall.
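For reference, here is a minimal sketch of what a regularisation option in logistic regression typically does. It assumes an L2 (ridge) penalty with strength `lam`, so the fitted objective is the mean log-loss plus λ·Σw_j². RapidMiner's operator may use a different penalty (for example an elastic-net mix) or scale λ differently. The toy data and the helpers `fit` and `regularised_log_loss` are hypothetical, written in plain Python for illustration only.

```python
import math


def sigmoid(z):
    # Numerically stable logistic function
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    ez = math.exp(z)
    return ez / (1.0 + ez)


def predict_proba(w, b, x):
    return sigmoid(sum(wj * xj for wj, xj in zip(w, x)) + b)


def regularised_log_loss(w, b, X, y, lam):
    """Mean log-loss plus an (assumed) L2 penalty lam * sum(w_j^2); the bias b is not penalised."""
    eps = 1e-12
    total = 0.0
    for xi, yi in zip(X, y):
        p = min(max(predict_proba(w, b, xi), eps), 1 - eps)  # clip to avoid log(0)
        total -= yi * math.log(p) + (1 - yi) * math.log(1 - p)
    return total / len(X) + lam * sum(wj * wj for wj in w)


def fit(X, y, lam, lr=0.1, epochs=2000):
    """Batch gradient descent on the L2-regularised log-loss."""
    n, d = len(X), len(X[0])
    w, b = [0.0] * d, 0.0
    for _ in range(epochs):
        grad_w, grad_b = [0.0] * d, 0.0
        for xi, yi in zip(X, y):
            err = predict_proba(w, b, xi) - yi
            for j in range(d):
                grad_w[j] += err * xi[j] / n
            grad_b += err / n
        # The penalty lam * ||w||^2 adds 2 * lam * w to the gradient
        w = [wj - lr * (gj + 2 * lam * wj) for wj, gj in zip(w, grad_w)]
        b -= lr * grad_b
    return w, b


# Hypothetical, perfectly separable toy data (one feature)
X = [[-2.0], [-1.0], [1.0], [2.0]]
y = [0, 0, 1, 1]
for lam in (0.0, 0.1, 1.0):
    w, b = fit(X, y, lam)
    print(f"lambda={lam}: w={w[0]:.3f}, b={b:.3f}, "
          f"loss={regularised_log_loss(w, b, X, y, lam):.3f}")
```

Larger λ pulls the coefficients toward zero. On separable data like this toy set, λ = 0 lets the weight keep growing with more iterations, which is one common source of overfitting that the penalty counteracts.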

1. Can you explain how regularisation leads to such a large jump in accuracy? Can you also explain the basics behind this RapidMiner option?

2. I couldn't see the lambda value in the results. Is there any way to display it?

3. In both cases I get 100% recall (zero false negatives, which is desirable in my case).

But I'm not sure whether it is a good model.
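Recall counts only false negatives, so 100% recall on its own says little about false positives. The plain-Python sketch below uses hypothetical labels (not the data from this post) to show that a classifier that labels every example positive also reaches 100% recall, with accuracy equal to the share of positives. Precision or the false-positive count from the confusion matrix separates such cases. `confusion_counts` and `summarise` are illustrative helpers, not RapidMiner functions.

```python
def confusion_counts(y_true, y_pred, positive=1):
    """Return (tp, fn, fp, tn) for a binary problem."""
    tp = fn = fp = tn = 0
    for t, p in zip(y_true, y_pred):
        if t == positive:
            if p == positive:
                tp += 1
            else:
                fn += 1
        elif p == positive:
            fp += 1
        else:
            tn += 1
    return tp, fn, fp, tn


def summarise(y_true, y_pred, positive=1):
    tp, fn, fp, tn = confusion_counts(y_true, y_pred, positive)
    return {
        "accuracy": (tp + tn) / len(y_true),
        "recall": tp / (tp + fn) if tp + fn else float("nan"),
        "precision": tp / (tp + fp) if tp + fp else float("nan"),
        "false_positives": fp,
    }


# Hypothetical labels: 7 positives, 3 negatives
y_true = [1] * 7 + [0] * 3
always_positive = [1] * 10       # trivial model: predicts positive for everything
better = [1] * 7 + [1, 0, 0]     # one false positive, no false negatives

print(summarise(y_true, always_positive))
# {'accuracy': 0.7, 'recall': 1.0, 'precision': 0.7, 'false_positives': 3}
print(summarise(y_true, better))
# {'accuracy': 0.9, 'recall': 1.0, 'precision': 0.875, 'false_positives': 1}
```

Both models have 100% recall. Only accuracy, precision and the false-positive count tell them apart.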

I achieved the above results after normalisation and cross-validation. I'm enclosing both results. Thanks!

Regards,

Thiru