Hello,

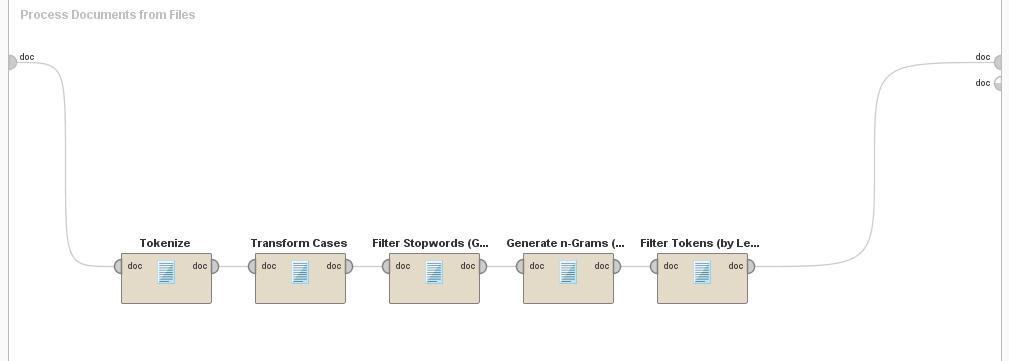

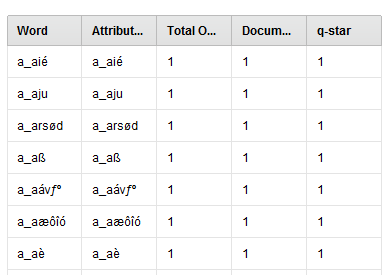

I'm trying to do text mining on a large Excel table with many text entries (many words in each cell). Unfortunately, my "Process Documents from Files" operator breaks my text into a mixture of symbols and letters.

I actually do not know why it is doing that, and my word list looks the same.

Can anyone tell me why this happens?

Thanks a lot

Imke