Hi,

I am a newbie here. Let me give you an overview of what I am doing.

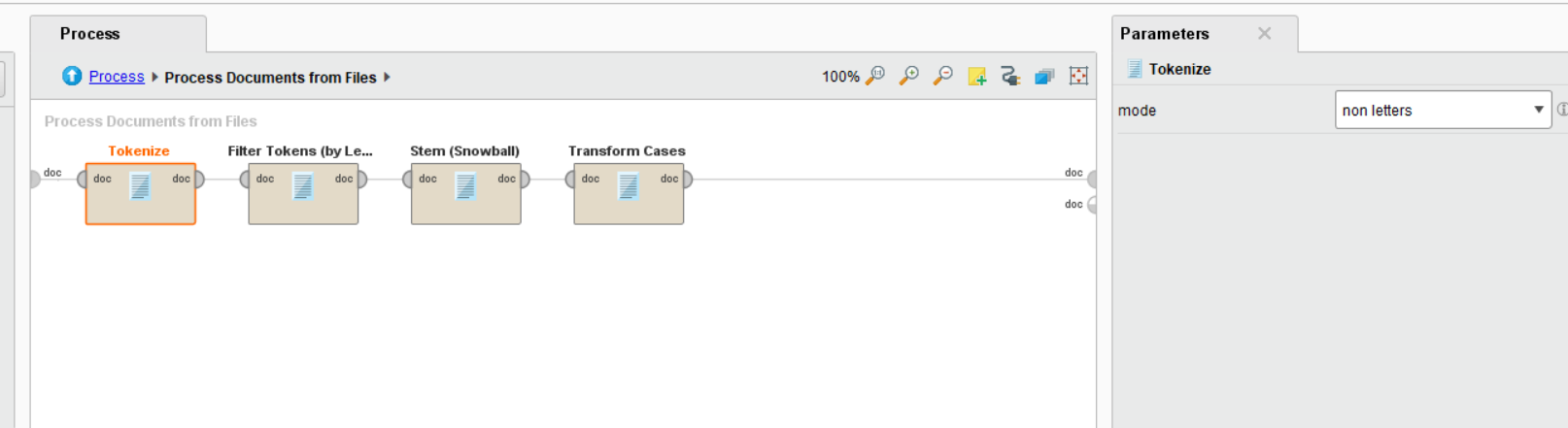

I am processing PDF files (generally trend reports) and want to create association rules from them. I saw a tutorial in which someone converted PDFs into TXT files in order to process them, so I converted those PDFs to text files with an online converter and tried to execute the process. I did it like this:

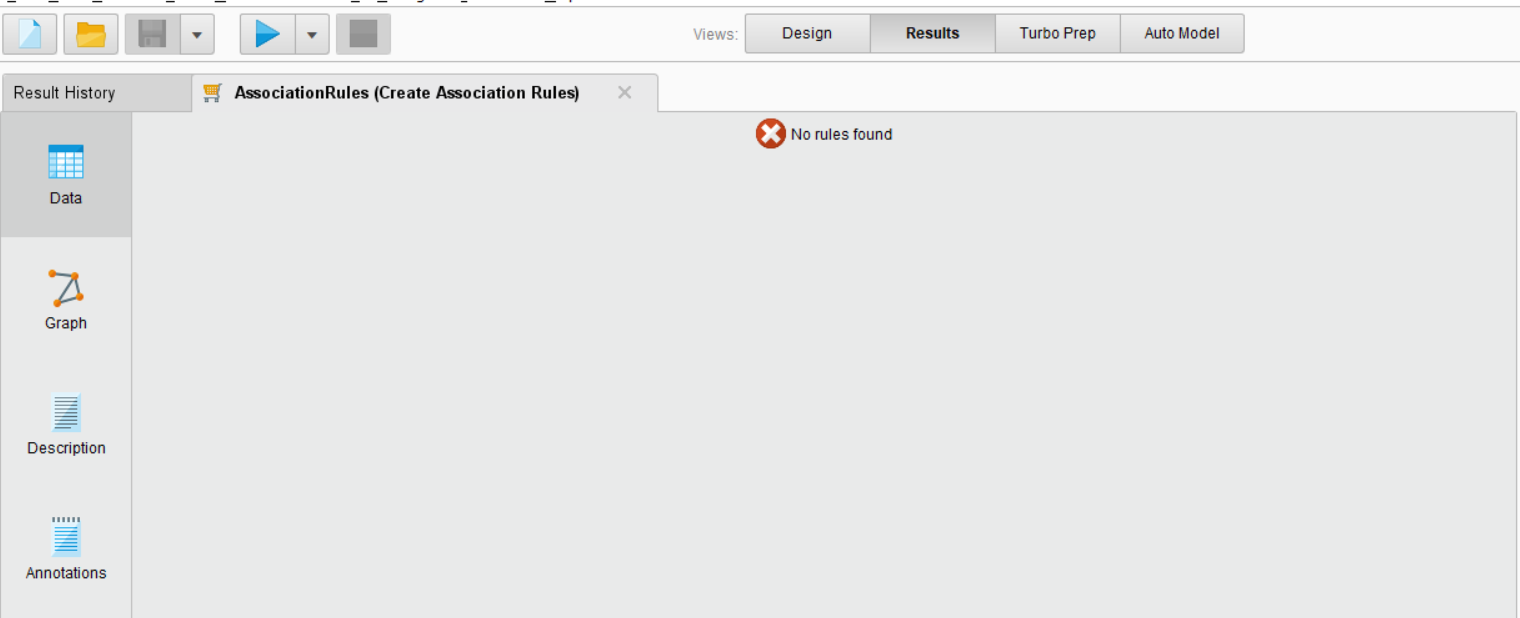
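For readers following along, one common way to get association rules from text is to treat each document as one transaction: the set of terms it contains (binary term occurrence). You then look for terms that frequently appear together. The Python sketch below shows that idea in plain code. It is not what RapidMiner does internally. The function names, the tiny stop-word list and the default thresholds are illustrative assumptions, and it only mines rules between single terms (X -> Y). With only 7 documents, a term's support can only be a multiple of 1/7 (about 0.14), so a high minimum support combined with a high minimum confidence can easily leave no rules at all.

```python
import re
from itertools import combinations

# Hypothetical, deliberately tiny stop-word list for illustration only.
STOP_WORDS = {"the", "and", "for", "with", "from", "that", "this"}


def to_transaction(text):
    """Turn one document into a set of terms (binary term occurrence)."""
    tokens = re.findall(r"[a-z]+", text.lower())
    return {t for t in tokens if len(t) > 2 and t not in STOP_WORDS}


def pair_rules(docs, min_support=0.5, min_confidence=0.8):
    """Mine single-term rules lhs -> rhs; support is counted over documents."""
    transactions = [to_transaction(d) for d in docs]
    n = len(transactions)

    # Document frequency of every term.
    item_count = {}
    for t in transactions:
        for term in t:
            item_count[term] = item_count.get(term, 0) + 1

    # Apriori pruning: a pair can only be frequent if both terms are frequent.
    frequent = {term for term, c in item_count.items() if c / n >= min_support}

    # Count co-occurring pairs of frequent terms.
    pair_count = {}
    for t in transactions:
        for pair in combinations(sorted(t & frequent), 2):
            pair_count[pair] = pair_count.get(pair, 0) + 1

    rules = []
    for (a, b), count in pair_count.items():
        support = count / n
        if support < min_support:
            continue
        # Each frequent pair yields up to two rules, one per direction.
        for lhs, rhs in ((a, b), (b, a)):
            confidence = count / item_count[lhs]
            if confidence >= min_confidence:
                rules.append((lhs, rhs, support, confidence))
    # Strongest rules first; ties broken alphabetically for stable output.
    return sorted(rules, key=lambda r: (-r[3], -r[2], r[0], r[1]))


docs = [
    "Electric vehicles and battery costs",
    "Battery prices fall as electric vehicles grow",
    "Solar power and battery storage",
]
for lhs, rhs, support, confidence in pair_rules(docs):
    print(f"{lhs} -> {rhs}  support={support:.2f}  confidence={confidence:.2f}")
```

On the three toy sentences it prints four rules, for example `electric -> battery` with support 0.67 and confidence 1.00. Raising `min_support` to 0.9 leaves no rules, because only `battery` then counts as a frequent term.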

At first, I didn't get any association rules as a result.

Then I tried playing around with the text-processing parameters, changing "PruneMethod" from absolute to percental or degree, but I got a memory error, even though I have around 450 GB of free space. And there are only 7 converted PDF files.
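Pruning removes terms that occur in too few or too many documents. This shrinks the vocabulary and therefore the number of term combinations the association-rule step has to keep in memory. A memory error refers to working memory (RAM), not free disk space. Below is a hypothetical sketch of document-frequency pruning, assuming absolute bounds are document counts and percentage bounds are relative to the number of documents. `prune_terms` and its parameters are illustrative names, not the RapidMiner operator's own. Under these assumptions, with 7 documents a lower bound of 40% (2.8 documents) keeps the same terms as an absolute lower bound of 3.

```python
from collections import Counter


def prune_terms(transactions, method="absolute", below=1, above=None):
    """Keep only terms whose document frequency lies within [below, above].

    method="absolute":   bounds are document counts.
    method="percentage": bounds are percentages (0-100) of all documents.
    """
    n = len(transactions)
    # Document frequency: in how many documents each term occurs.
    df = Counter(term for t in transactions for term in t)
    if method == "absolute":
        low = below
        high = n if above is None else above
    elif method == "percentage":
        low = below / 100 * n
        high = n if above is None else above / 100 * n
    else:
        raise ValueError(f"unknown prune method: {method!r}")
    keep = {term for term, count in df.items() if low <= count <= high}
    # Drop pruned terms from every document.
    return [t & keep for t in transactions], keep


docs = [{"trend", "market", "ai"}, {"trend", "market"}, {"trend"}, {"trend", "energy"}]
print(sorted(prune_terms(docs, "absolute", below=2, above=3)[1]))       # ['market']
print(sorted(prune_terms(docs, "percentage", below=50, above=100)[1]))  # ['market', 'trend']
```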

Please guide me on how to get association rules.

Kind Regards,

Rashid