Hi guys,

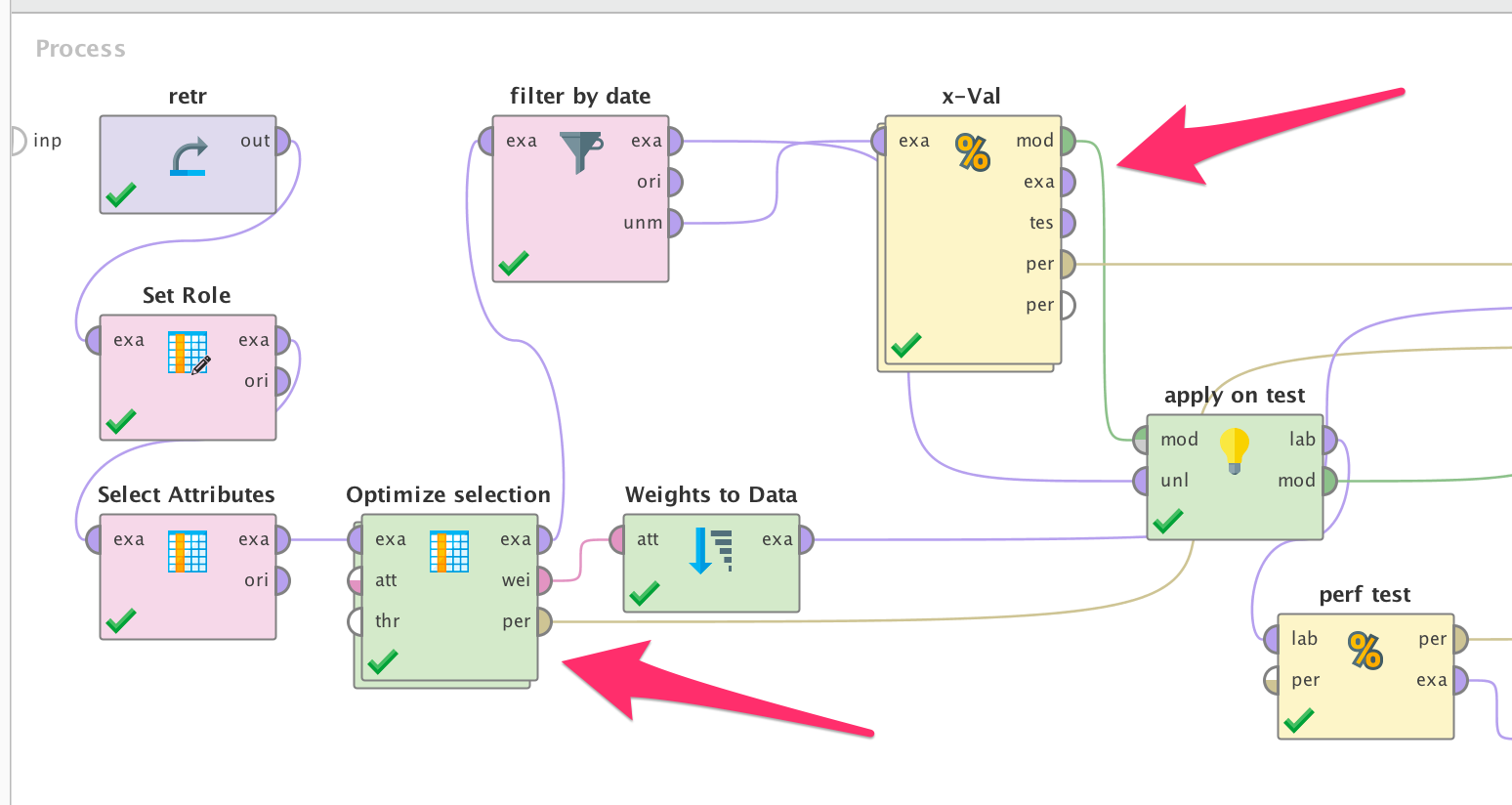

I have a process in which I perform evolutionary selection optimization, validate the model in the usual way with cross-validation (X-Validation), and then apply it to test data. The model I am training is a GBT with specific parameters, so it sits inside the X-Validation operator.

In fact, "Optimize Selection (Evolutionary)" is also a nested operator: it contains its own validation process with another learner inside. Initially, I used the same GBT learner inside the optimization.
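To make the two nested levels concrete, here is a minimal, stdlib-only Python sketch of the same structure. It is not the operator's actual implementation. Assumptions: a toy genetic algorithm stands in for the evolutionary selection, a nearest-centroid classifier stands in for GBT, and all names (`evolutionary_selection`, `cv_accuracy`, `make_data`, etc.) and the synthetic data are hypothetical. The inner learner is whatever `(fit, predict)` pair scores the attribute masks; the outer learner is the one cross-validated afterwards on the selected attributes.

```python
import random
from statistics import mean

# Hypothetical stand-in learner: nearest-centroid classifier (not GBT).
def centroid_fit(X, y):
    """Return {class: centroid vector} computed from the training rows."""
    model = {}
    for c in sorted(set(y)):
        rows = [x for x, t in zip(X, y) if t == c]
        model[c] = [mean(col) for col in zip(*rows)]
    return model

def centroid_predict(model, x):
    """Predict the class whose centroid is closest in squared Euclidean distance."""
    return min(model, key=lambda c: sum((a - b) ** 2 for a, b in zip(x, model[c])))

def project(X, mask):
    """Keep only the attributes switched on in the boolean mask."""
    return [[v for v, keep in zip(row, mask) if keep] for row in X]

def cv_accuracy(X, y, k, fit, predict):
    """Plain k-fold cross-validation accuracy (interleaved folds, no shuffling)."""
    n = len(X)
    scores = []
    for f in range(k):
        test_idx = list(range(f, n, k))
        held_out = set(test_idx)
        train_idx = [i for i in range(n) if i not in held_out]
        model = fit([X[i] for i in train_idx], [y[i] for i in train_idx])
        hits = sum(predict(model, X[i]) == y[i] for i in test_idx)
        scores.append(hits / len(test_idx))
    return mean(scores)

def evolutionary_selection(X, y, fit, predict, pop_size=10, generations=15, k=3, seed=0):
    """Toy genetic algorithm over attribute masks.

    The fitness of a mask is the INNER cross-validated accuracy of the learner
    passed in as (fit, predict): this is the "learner inside the optimization".
    """
    rng = random.Random(seed)
    n_feat = len(X[0])

    def fitness(mask):
        return cv_accuracy(project(X, mask), y, k, fit, predict) if any(mask) else 0.0

    pop = [[rng.random() < 0.5 for _ in range(n_feat)] for _ in range(pop_size)]
    for _ in range(generations):
        # Truncation selection: keep the better half as parents.
        parents = sorted(pop, key=fitness, reverse=True)[: pop_size // 2]
        children = []
        while len(parents) + len(children) < pop_size:
            a, b = rng.sample(parents, 2)
            cut = rng.randrange(1, n_feat)  # one-point crossover
            # Bit-flip mutation with rate 1 / n_feat per gene.
            child = [(not g) if rng.random() < 1 / n_feat else g for g in a[:cut] + b[cut:]]
            children.append(child)
        pop = parents + children
    return max(pop, key=fitness)

def make_data(n=60, n_noise=4, seed=1):
    """Synthetic data: attribute 0 separates the classes, the rest are noise."""
    rng = random.Random(seed)
    X, y = [], []
    for i in range(n):
        label = i % 2
        X.append([3.0 * label + rng.gauss(0, 0.5)] + [rng.gauss(0, 1) for _ in range(n_noise)])
        y.append(label)
    return X, y

if __name__ == "__main__":
    X, y = make_data()
    # Inner level: evolutionary selection scored by the inner learner.
    mask = evolutionary_selection(X, y, centroid_fit, centroid_predict)
    # Outer level: cross-validate the final learner on the selected attributes only.
    # Here it is the same learner; passing a different (fit, predict) pair is the
    # choice the question is about.
    outer = cv_accuracy(project(X, mask), y, 5, centroid_fit, centroid_predict)
    print("selected attributes:", [i for i, keep in enumerate(mask) if keep])
    print("outer CV accuracy:", round(outer, 3))
```

Because the inner and outer learners are separate arguments, the sketch makes explicit that they are independent choices: the inner one only decides which attributes survive, and the outer one is what gets evaluated and applied to the test data.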

My question is: do I necessarily have to use identical learners, with the same parameters, in both nested operators marked with arrows? I have experimented a bit and got slightly different results, though the difference is quite subtle. For example, if I use a random forest inside the optimization, it selects a slightly different set of attributes, which affects the final performance, but the performance does not drop dramatically.
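One way to put a number on "slightly different" is to compare the attribute subsets selected with each inner learner, for example with the Jaccard index (shared attributes divided by all attributes in either subset). The sketch below is a minimal Python illustration; the attribute names are hypothetical placeholders, not results from the process above.

```python
def jaccard(a, b):
    """Jaccard index |A ∩ B| / |A ∪ B| of two attribute subsets (1.0 = identical)."""
    a, b = set(a), set(b)
    if not a and not b:
        return 1.0
    return len(a & b) / len(a | b)

# Hypothetical attribute names, for illustration only.
selected_with_gbt = {"att1", "att2", "att3"}
selected_with_rf = {"att1", "att2", "att4"}
print(jaccard(selected_with_gbt, selected_with_rf))  # 0.5
```

Since evolutionary search is randomized, it can help to also compute this index between runs that use the same inner learner but different random seeds; that gives a baseline for how much the selected subset varies even when the learners are identical.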

So I would like some "best practice" advice: is it essential to use identical learners in this setup, or does it not matter much in the end? In other words, does the type of learner used inside the evolutionary optimization matter, and how much?