Hi guys,

I am doing a project where I need to create a decision tree using Python and then embed it in RapidMiner using the Execute Python operator.

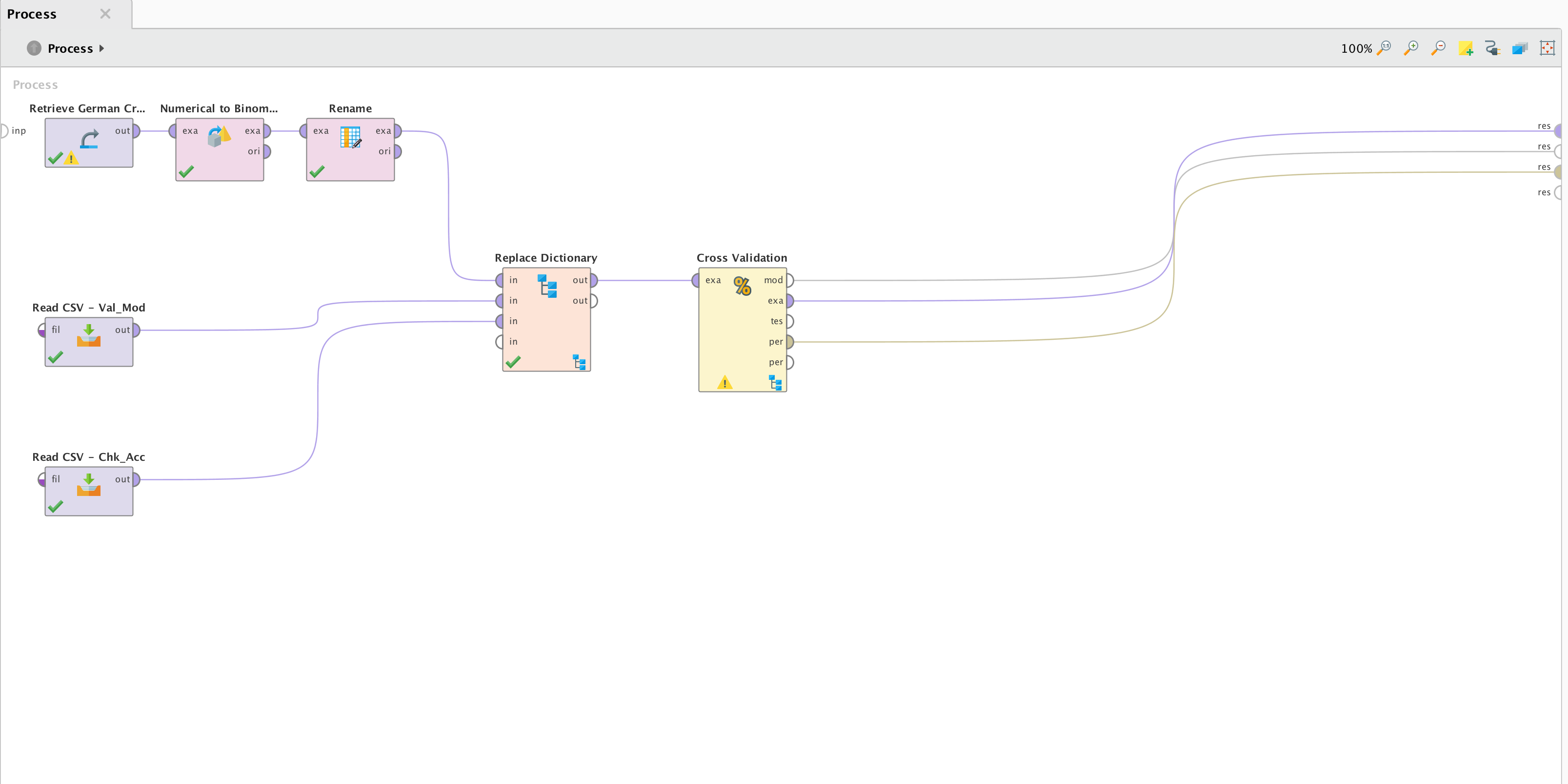

These are screenshots of my process:

Subprocess in Cross Validation


This is my code for the decision tree:

```python
import numpy as np
import pandas as pd
# sklearn.cross_validation was removed in scikit-learn 0.20;
# train_test_split now lives in sklearn.model_selection
from sklearn.model_selection import train_test_split
from sklearn.tree import DecisionTreeClassifier
from sklearn.metrics import accuracy_score
from sklearn import tree

# rm_main is a mandatory function,
# the number of arguments has to be the number of input ports (can be none)
def rm_main(data):
    # import data
    file = '04_Class_4.1_german-credit-decoded.xlsx'
    xl = pd.ExcelFile(file)
    print(xl.sheet_names)

    # load a sheet into a DataFrame
    gr_raw = xl.parse('RapidMiner Data')

    # create arrays for the features, X, and response, y, variable
    y = gr_raw['Credit Rating=Good'].values
    X = gr_raw.drop('Credit Rating=Good', axis=1).values

    # split data into training and testing set
    X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.3, random_state=50)

    # build decision tree classifier using gini index
    clf_gini = DecisionTreeClassifier(criterion='gini', random_state=50, max_depth=10, min_samples_leaf=5)
    clf_gini.fit(X_train, y_train)

    return clf_gini
```
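For comparison, here is a minimal sketch of an `rm_main` that takes its data from the operator's input port instead of reading the Excel file from a relative path. It assumes that the Execute Python operator hands the connected ExampleSet to `rm_main` as a pandas DataFrame and converts a returned DataFrame back into an ExampleSet, that the label column is named `Credit Rating=Good` as in the sheet above, and that all other columns are already numeric. The `LABEL` constant and the `prediction` column name are hypothetical. It also drops `train_test_split`, on the assumption that the Cross Validation operator already does the splitting.

```python
import pandas as pd
from sklearn.tree import DecisionTreeClassifier

# Hypothetical constant: label column name taken from the Excel sheet above
LABEL = 'Credit Rating=Good'


def rm_main(data):
    # 'data' is assumed to be the ExampleSet from the first input port,
    # passed in as a pandas DataFrame, so no file is read here
    y = data[LABEL].values
    X = data.drop(LABEL, axis=1).values  # assumes all features are numeric

    # Same Gini settings as the original script; no train/test split,
    # assuming the Cross Validation operator provides the folds
    clf_gini = DecisionTreeClassifier(criterion='gini', random_state=50,
                                      max_depth=10, min_samples_leaf=5)
    clf_gini.fit(X, y)

    # Return a DataFrame (assumed to become an ExampleSet again) with the
    # tree's predictions attached as a new, hypothetically named column
    result = data.copy()
    result['prediction'] = clf_gini.predict(X)
    return result
```

Because the function only depends on its `data` argument, it can also be called directly with any DataFrame that has the label column, which makes it easy to test before pasting it into the operator.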

When executed, it gives me an error, and I am not sure which part of this code I should leave out for it to run successfully.
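One way to narrow down which part fails is to run the same steps in a plain Python session, outside RapidMiner, where the full traceback is visible. A minimal sketch, assuming a small synthetic DataFrame in place of the Excel sheet (the `Duration` and `Amount` columns and their values are made up) and the current `sklearn.model_selection` import; it also uses `accuracy_score`, which the script imports but never calls:

```python
import pandas as pd
from sklearn.model_selection import train_test_split
from sklearn.tree import DecisionTreeClassifier
from sklearn.metrics import accuracy_score

# Synthetic stand-in for the 'RapidMiner Data' sheet (hypothetical values)
data = pd.DataFrame({
    'Duration': [6, 48, 12, 42, 24, 36, 12, 30, 18, 24, 9, 60] * 5,
    'Amount': [1169, 5951, 2096, 7882, 4870, 9055,
               2835, 6948, 3059, 5234, 1282, 8613] * 5,
    'Credit Rating=Good': [1, 0, 1, 0, 1, 0, 1, 0, 1, 0, 1, 0] * 5,
})

# Same feature/label split as in the script
y = data['Credit Rating=Good'].values
X = data.drop('Credit Rating=Good', axis=1).values

# Same 70/30 split and Gini tree settings as in the script
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.3, random_state=50)
clf_gini = DecisionTreeClassifier(criterion='gini', random_state=50,
                                  max_depth=10, min_samples_leaf=5)
clf_gini.fit(X_train, y_train)

# Score the held-out 30% to confirm the whole pipeline runs end to end
acc = accuracy_score(y_test, clf_gini.predict(X_test))
print('Test accuracy:', acc)
```

If this runs but the operator still fails, the problem is more likely in how the script interacts with RapidMiner (for example the relative Excel path or the returned object) than in the scikit-learn code itself.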

Would appreciate any advice or help on this!

Thank you.

Regards,

Azmir F